Hackathon 0x1 – Pimp my Review or the Epic Birth of a Gerrit Plugin

This series of articles describes some of the best projects built by the Intersec R&D team during the 2-day Hackathon that took place on the 3rd and 4th of July.

The goal had been set a day or two prior to the beginning of the hackathon: we were hoping to make Gerrit better at recommending relevant reviewers for a given commit. To those who haven’t heard of it, Gerrit is a web-based code review system. It is a nifty Google-backed open-source project evolving amid an active community of users. We have been using this product here at Intersec since 2011 and some famous software projects also rely heavily on it for their development process.

Our team consisted of five people: Kamal, Romain, Thomas, Louis and Romain (myself).

The battle

Battle plan

- Get a local up-to-date Gerrit server up and running.

- Set up an up-to-date copy of Intersec’s code repository (test & demonstration).

- Understand the way Gerrit plugins work.

- Experiment with various ideas to improve the reviewer recommendation.

- Craft a mind-blowing demo!

Getting started: the catch

As C developers, there is one thing that might have put us off had we known it earlier: Gerrit is a Java project. Things were not that bad though: we had already been using (quite happily) the git-blame plugin for a while, so bootstrapping our new plugin from this one seemed like a not so painful way to go.

A word of caution: installing Gerrit from source can be tedious if you are not a Java developer. For instance, the installation process seems to be much smoother if your Java development toolkit is up to date. You also need a whole lot of other tools (Ant, Buck) that are likely to turn into time guzzlers. So if you ever find yourself downloading the source code with the ambition of compiling it (and even running it), please make sure you really need to in the first place.

After a bit of work, we managed to get a Gerrit instance built from source running on one computer, and a binary installation of Gerrit with a clone of the repository running on a laptop.

So you want to make your own Gerrit plugin?

As specified here, two APIs are available to develop a Gerrit plugin. Depending on the level of data you need, you may want to choose one or the other. Finding relevant reviewers seems hard to achieve without proper access to everyone’s commit/review history, so we went for the more complex API. The cost of this unlimited access is the need to compile Gerrit from source.

Making better suggestions: any suggestion?

Good ideas versus great ideas

Currently, when a new patch is on its way, the git-blame plugin looks up the committers who have modified the same lines and automatically adds them as reviewers of the patch. This already yields pretty good results, but it doesn’t handle some corner cases well. For instance, when a new file is created, or when a patch only adds new code, there are no previously modified lines to blame, so there might be no result.
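To make the blame-based lookup more concrete, here is a minimal sketch in Python (not the plugin's actual Java code) that counts how many lines each author owns in the output of `git blame --line-porcelain`. The function name `count_blamed_lines` and the abbreviated sample output are our own assumptions for illustration. Restricting the blame to the lines touched by a patch (for instance with the `-L` option of `git blame`) is left out.

```python
from collections import Counter


def count_blamed_lines(porcelain_output: str) -> Counter:
    """Count, per author, the lines attributed to them.

    Expects text in the format produced by `git blame --line-porcelain`,
    where each blamed line is preceded by unindented header lines
    (including "author <name>") and the source line itself starts with a tab.
    """
    counts = Counter()
    for line in porcelain_output.splitlines():
        # "author-mail", "author-time", etc. do not match "author " (with a space),
        # and source lines are tab-prefixed, so only the author header is counted.
        if line.startswith("author "):
            counts[line[len("author "):]] += 1
    return counts


# Abbreviated, hypothetical sample: real output has more header lines per entry.
SAMPLE = "\n".join([
    "1111111111111111111111111111111111111111 1 1 2",
    "author Alice",
    "author-mail <alice@example.com>",
    "\tint main(void)",
    "1111111111111111111111111111111111111111 2 2",
    "author Alice",
    "author-mail <alice@example.com>",
    "\t{",
    "2222222222222222222222222222222222222222 3 3 1",
    "author Bob",
    "author-mail <bob@example.com>",
    "\t    return 0;",
])

blame_counts = count_blamed_lines(SAMPLE)
```

A count like this is one plausible way to obtain a per-author number of blamed lines. It also shows the corner case directly: for a brand new file there is nothing to blame, so the counter stays empty.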

A simple way to go one step further would be to also take previous reviewers of the same file into consideration. This is a very attractive idea, but one can immediately spot significant caveats: for instance, when dealing with a very large file, we might pick reviewers who are not actually familiar with the specific code under review.

Actually, when it comes to better reviewing suggestions, ideas are plentiful and tend to shoot in every direction. For each new idea flowing in, counter-examples can be pointed out immediately, bringing in other new ideas. Thinking of our task as a ranking problem helps a lot: we gather a list of potential reviewers, score them according to weighted criteria that can be tuned, and suggest the highest-scoring candidates as reviewers. This is going to be our ambition from now on!

Tell me how you review and I’ll tell you who you are

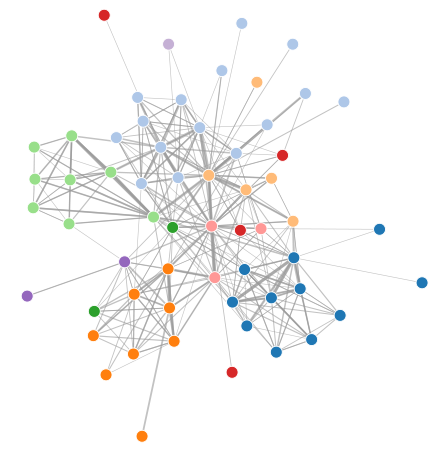

Graphs can be an interesting way to visualize the reviewer/committer relationship. The figure above illustrates something that is intuitively easy to foresee. While most people do not seem to review anything outside of their own team, one can also notice that a handful of developers (the nodes lying at the center of the graph) appear to be universal reviewers.

Let me get this straight: universal reviewers are a blessing for any software development team. But they also carry a huge threat to the relevance of our idea. Indeed, if we were to apply our idea without any further thought, we’d probably end up introducing some harmful mechanisms such as suggesting reviewers solely based on past reviews, therefore fostering the reproduction of current behaviors.

But keep in mind that if a company sometimes needs heroes, it can’t rely on them, because in real life…

Back to our problem: we need to define a weight to select the most suitable reviewers for a given commit. Besides taking into account the past contributions made by a potential reviewer to the targeted piece of code (as a reviewer or as a committer), this weight also has to balance the workload of this reviewer by adapting to their reviewing habits. So we eventually proposed a set of three parameters to adapt the weight for the selection of reviewers.

- weightBlame (default value 1): relative importance of a reviewer having modified the code.

- weightLastReviews (default value 1): relative importance of someone having reviewed the same code in the past.

- weightWorkload (default value -1): relative importance of the workload of a potential reviewer.

Eventually the final weight is given by:

finalWeight = weightBlame * nb_lines_blame + weightLastReviews * nb_reviews_on_same_file + weightWorkload * nb_incoming_reviews

The parameter maxReviewers (default value 3) was also added to allow the user to set the maximum number of suggested reviewers.

So, if our plugin is used without modifying the default parameters, it should suggest at most 3 reviewers, giving priority to those who are familiar with the piece of code concerned (as reviewer and/or committer) and whose workload is as low as possible. Of course, these are only default values, and a test phase is needed to tune them to the specifics of each software development team.
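The weighting scheme described above can be sketched in a few lines of Python. This is an illustration of the formula, not the plugin's actual Java code: the `Candidate` class, the function names and the sample figures for alice, bob, carol and dave are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    nb_lines_blame: int           # lines of the touched code last modified by this person
    nb_reviews_on_same_file: int  # past reviews by this person on the same file
    nb_incoming_reviews: int      # reviews currently waiting for this person


def final_weight(c, weight_blame=1, weight_last_reviews=1, weight_workload=-1):
    """Apply the finalWeight formula with the default parameter values."""
    return (weight_blame * c.nb_lines_blame
            + weight_last_reviews * c.nb_reviews_on_same_file
            + weight_workload * c.nb_incoming_reviews)


def suggest_reviewers(candidates, max_reviewers=3, **weights):
    """Rank candidates by decreasing final weight and keep the best ones."""
    ranked = sorted(candidates, key=lambda c: final_weight(c, **weights), reverse=True)
    return [c.name for c in ranked[:max_reviewers]]


# Hypothetical data: dave knows the code well but is swamped with reviews.
candidates = [
    Candidate("alice", nb_lines_blame=10, nb_reviews_on_same_file=2, nb_incoming_reviews=5),
    Candidate("bob", nb_lines_blame=3, nb_reviews_on_same_file=4, nb_incoming_reviews=1),
    Candidate("carol", nb_lines_blame=0, nb_reviews_on_same_file=1, nb_incoming_reviews=0),
    Candidate("dave", nb_lines_blame=8, nb_reviews_on_same_file=0, nb_incoming_reviews=12),
]

default_suggestion = suggest_reviewers(candidates)
# Ignoring the workload brings the overloaded reviewer back into the suggestions.
no_workload_suggestion = suggest_reviewers(candidates, weight_workload=0)
```

With the default weights, the scores are 7 for alice, 6 for bob, 1 for carol and -4 for dave, so dave is left out despite knowing the code. Setting weightWorkload to 0 puts dave back in second place, which is exactly the "always the same heroes" behavior the negative workload weight is meant to dampen.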

What’s next?

Well, for now, the plugin is available on GitHub here. In the future, we might add some nice features and submit the plugin to the Gerrit project. We’ll keep you posted!

Special Thanks

Our Chief Scientist was kind enough to spend a good chunk of the night helping us with the setup, and we would like to thank him for that. His biggest contribution though was to introduce us to RoboGeisha, a Japanese masterpiece of nonsense and goofiness.