Hackathon 0x0b: Pimp My Recruitment

At Intersec we love hiring new talent, especially new C developers who can reinforce our teams.

And of course the candidates have to face a technical challenge so that we can assess their technical skills.

This is a typical step in the recruitment process that can become very time-consuming for the reviewers and stressful for the candidates. Pimp My Recruitment is an initiative to help automate this whole process, both for the candidate and the reviewer.

To achieve this we worked on three areas:

- Automation of C code review

- Plagiarism detection

- The recruitment process

Automation of the code review

A challenging coding exercise in C is given to the candidates so that reviewers can have an understanding of their skills and decide whether they should be interviewed or not.

The reviewing process consists of repetitive tasks and we would like to automate them.

We made a script to do most of the heavy lifting:

- check the output of a binary

- check that valgrind does not detect leaks, double frees, etc.

- check that performance is within reasonable limits
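The three checks above can be sketched as follows. This is a minimal illustration, not the script we wrote: the function names, the `CheckResult` type, the timeouts and the exit code 42 are all assumptions made for the example. The valgrind options used (`--leak-check=full`, `--error-exitcode`) are standard ones.

```python
import subprocess
import time
from dataclasses import dataclass


@dataclass
class CheckResult:
    """Outcome of one automated check (hypothetical type for this sketch)."""
    name: str
    passed: bool
    detail: str = ""


def check_output(cmd, stdin_text, expected, timeout=10.0):
    """Run the candidate binary and compare its stdout with the expected text."""
    proc = subprocess.run(cmd, input=stdin_text, capture_output=True,
                          text=True, timeout=timeout)
    ok = proc.returncode == 0 and proc.stdout == expected
    return CheckResult("output", ok, proc.stdout)


def check_valgrind(cmd, stdin_text, timeout=120.0):
    """Run the binary under valgrind.

    With --leak-check=full, leaks count as errors, and --error-exitcode=42
    makes valgrind exit with 42 when it reports any error. The value 42 is
    arbitrary; it only has to differ from the program's own exit codes.
    """
    vg_cmd = ["valgrind", "--quiet", "--leak-check=full",
              "--error-exitcode=42"] + list(cmd)
    proc = subprocess.run(vg_cmd, input=stdin_text, capture_output=True,
                          text=True, timeout=timeout)
    return CheckResult("valgrind", proc.returncode != 42, proc.stderr)


def check_performance(cmd, stdin_text, max_seconds):
    """Fail the check if the binary does not finish within max_seconds."""
    start = time.perf_counter()
    try:
        subprocess.run(cmd, input=stdin_text, capture_output=True,
                       text=True, timeout=max_seconds)
    except subprocess.TimeoutExpired:
        return CheckResult("performance", False, f"exceeded {max_seconds}s")
    elapsed = time.perf_counter() - start
    return CheckResult("performance", True, f"{elapsed:.3f}s")
```

A call such as `check_output(["./solution"], sample_input, expected_output)` would cover the first bullet, where `./solution`, `sample_input` and `expected_output` stand for whatever the exercise defines.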

We grouped the results of these tests into the following categories:

- basic functionality

- rules respected

- memory leaks

- performance

We gave a grade to each category so that the reviewer can have a good understanding of the strong points as well as the weak points. Here is a mockup output:

basic functionality tests 1/1

test_basic SUCCESS

rules respected tests 1/2

test_simple_rules SUCCESS

test_hard_rules FAILURE

memory leak tests 2/2

test_small_sample SUCCESS

test_large_sample SUCCESS

performance tests 2/2

test_simple_perf SUCCESS

test_hard_perf SUCCESS

TOTAL: 6/7

A quick look at this grade sheet is enough for a reviewer to make an easy and fast decision on whether or not they should spend more time reviewing a candidate’s solution.
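A grade sheet like the mockup could be produced by a small formatter along these lines. This is only a sketch: the input layout (a dictionary mapping each category to a list of `(test_name, passed)` pairs) and the function name are assumptions for the example.

```python
def format_grade_sheet(results):
    """Render per-category scores and a total.

    results: dict mapping a category name to a list of (test_name, passed)
    pairs. Categories are printed in insertion order.
    """
    lines = []
    total_passed = 0
    total = 0
    for category, tests in results.items():
        passed = sum(1 for _, ok in tests if ok)
        lines.append(f"{category} {passed}/{len(tests)}")
        for name, ok in tests:
            lines.append(f"  {name} {'SUCCESS' if ok else 'FAILURE'}")
        total_passed += passed
        total += len(tests)
    lines.append(f"TOTAL: {total_passed}/{total}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(format_grade_sheet({
        "basic functionality tests": [("test_basic", True)],
        "rules respected tests": [("test_simple_rules", True),
                                  ("test_hard_rules", False)],
    }))
```

Keeping the per-category score next to the individual test names lets the reviewer see at a glance which area failed before opening the code.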

Detect source code plagiarism

We consider that honesty is the best policy and we like candidates who take the challenge seriously.

Out of respect for the candidates who spend time working on their submission, and because fraudulent submissions are flagged during the interview with our expert, we decided to tackle plagiarism detection as part of this project to save everyone's time.

This hackathon project aims at finding a way to compare the candidate’s submission with our database of submissions, to eliminate fraud suspicion (or at least detect blatant copies automatically). We looked at different ways of doing this, from comparing the syntax trees to looking into machine learning, but they were either inefficient or too complicated for the scope of this hackathon.

One solution that stood out from all the others is widely used in the academic field (to detect plagiarism between students for example): Moss (Measure Of Software Similarity), an automatic system for determining the similarity of programs. It was written in 1994 by Alex Aiken, a professor at Stanford University, who has since lost the source code. The idea behind Moss has been published as a research paper called “Winnowing: Local Algorithms for Document Fingerprinting”.

This algorithm allows us to obtain fingerprints from the source code in a few clever steps:

- Normalization of the source code to remove unwanted characters from the input

- Data sampling and fingerprinting on the normalized code using a simple checksum

- Window selection gathering the fingerprinted samples, using the lowest checksum as the identifier of the window
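The three steps above can be sketched in a few lines. This is an illustrative version, not the scanoss implementation we used: the normalization rule (strip whitespace, lowercase), the use of CRC32 as the "simple checksum", and the default values `k=5` and `window=4` are all assumptions chosen for the example.

```python
import re
import zlib


def normalize(source):
    """Step 1: drop whitespace and case so formatting changes do not matter."""
    return re.sub(r"\s+", "", source).lower()


def kgram_hashes(text, k):
    """Step 2: checksum every k-character substring of the normalized text."""
    return [zlib.crc32(text[i:i + k].encode("utf-8"))
            for i in range(len(text) - k + 1)]


def winnow(hashes, window):
    """Step 3: in each window of consecutive hashes, keep the smallest one.

    Ties are resolved by taking the rightmost minimum, as described in the
    Winnowing paper. Returns a set of (position, hash) pairs, so a minimum
    shared by overlapping windows is recorded only once.
    """
    fingerprints = set()
    for start in range(len(hashes) - window + 1):
        win = hashes[start:start + window]
        smallest = min(win)
        offset = window - 1 - win[::-1].index(smallest)
        fingerprints.add((start + offset, smallest))
    return fingerprints


def fingerprint(source, k=5, window=4):
    """Full pipeline: normalize, hash k-grams, select window minima."""
    return winnow(kgram_hashes(normalize(source), k), window)
```

Inputs shorter than `k + window - 1` normalized characters yield an empty fingerprint set in this sketch; a real tool would need to decide how to treat such tiny files.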

Through this work we were able to compare the candidate’s source code to others. We compared the checksums of windows from one file to another and highlighted the lines suspected of plagiarism. The results were surprisingly accurate.
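As a rough illustration of that comparison step, the sketch below assumes each file's fingerprints are available as a dictionary mapping a checksum to the set of source lines it came from. That layout, the function names, and the use of Jaccard similarity as an overall score are assumptions for the example, not a description of our prototype.

```python
def similarity(fp_a, fp_b):
    """Jaccard similarity between two fingerprint sets, between 0.0 and 1.0."""
    a, b = set(fp_a), set(fp_b)
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def suspicious_lines(fp_a, fp_b):
    """Lines of file A whose fingerprints also appear in file B.

    fp_a and fp_b map a checksum to the set of line numbers that produced it.
    """
    shared = set(fp_a) & set(fp_b)
    return sorted({line for checksum in shared for line in fp_a[checksum]})


if __name__ == "__main__":
    candidate = {101: {1}, 202: {2, 3}, 303: {5}}
    reference = {202: {10}, 303: {11}, 404: {12}}
    print(similarity(candidate, reference))        # 0.5
    print(suspicious_lines(candidate, reference))  # [2, 3, 5]
```

A single score is convenient for ranking submissions against a database, while the line list is what a reviewer would want highlighted.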

We used an open-source implementation of the Winnowing algorithm developed in C by scanoss on GitHub, and you can read more about the algorithm in the research paper cited above.

With a bit more time we could have implemented the automatic comparison against a whole database of submissions, and wired it to the Web interface presented below to have a complete all-in-one solution to review the candidates' submissions.

Recruitment process

Right now the recruitment process leading to the interview is tedious:

- The recruitment manager sends an email to the candidate with the exercise subject

- The candidate replies to the recruitment manager with an archive containing their solution

- The recruitment manager posts a link to the archive on Slack

- A reviewer posts a review on Slack and our applicant tracking system

This means a lot of scattered pieces of information. As a result, the recruitment manager or reviewers can sometimes lose track of the status of a candidate’s application. Candidates have no feedback while writing up their solutions and have little to no means of asking questions.

We worked on a mockup of an online platform that would help us streamline that process. Most importantly, it should better support candidates. No other platform does what we do: review a full project completed with the candidates' own development tools. Its purpose is to provide candidates and reviewers with a single place and a set of tools to achieve that.

The workflow could be streamlined like this:

- The recruitment manager creates an account for the candidate

- The recruitment manager gives the candidate access to the platform

- The candidate logs into the platform, gets the subject and can test the solution on the platform before submitting it

- The reviewer can access pending reviews and make comments on them that are then passed to our applicant tracking system
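One way to keep track of where each application stands in this workflow is a small state machine. The sketch below is purely illustrative: the status names and allowed transitions are assumptions derived from the four steps above, not part of the mockup.

```python
from enum import Enum


class Status(Enum):
    """Hypothetical application states matching the workflow steps."""
    INVITED = "invited"          # account created, access given
    IN_PROGRESS = "in_progress"  # candidate has logged in and got the subject
    SUBMITTED = "submitted"      # solution submitted, pending review
    REVIEWED = "reviewed"        # review done and passed to the tracking system


# Each status lists the statuses it may move to.
TRANSITIONS = {
    Status.INVITED: {Status.IN_PROGRESS},
    Status.IN_PROGRESS: {Status.SUBMITTED},
    Status.SUBMITTED: {Status.REVIEWED},
    Status.REVIEWED: set(),
}


def advance(current, target):
    """Return the new status, or raise if the transition is not allowed."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"cannot go from {current.value} to {target.value}")
    return target
```

Storing a single status per candidate in one place is what would let the recruitment manager and the reviewers stop reconstructing it from emails and Slack threads.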

This way all the information leading to the interview would be centralized in one place visible to Intersec staff members involved in the recruitment process.

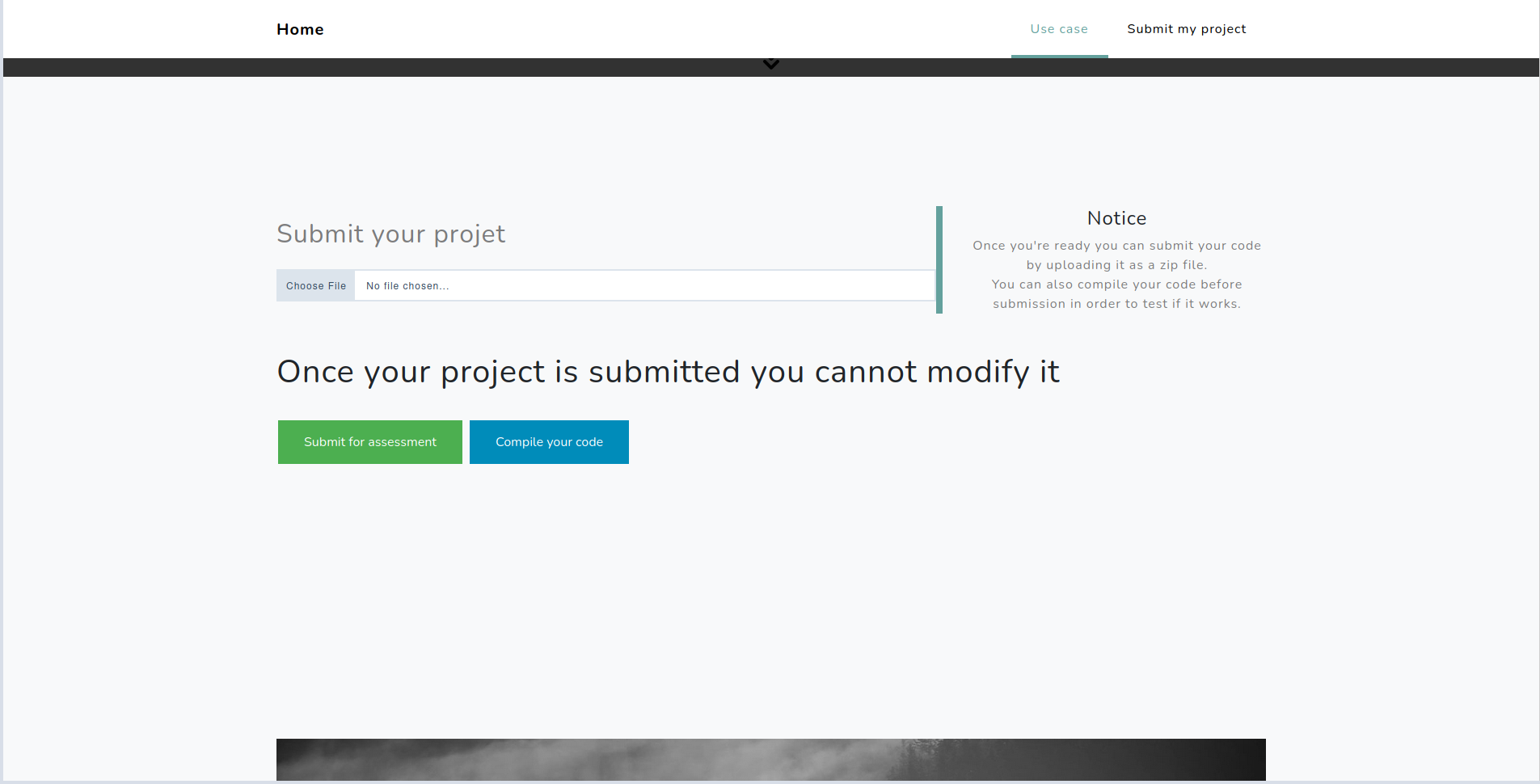

Candidate view

Candidates land on a page where they can either compile their solution or submit it. This is inspired by online test platforms such as HackerRank, LeetCode or CodinGame.

The key difference here is that the candidate has to upload an archive instead of writing code in an online editor. This is important to us because we want the candidate to work with their own editor and development tools.

The “Compile your code” button allows the candidate to upload their archive to our platform so that they can have the following feedback:

- Whether the solution compiles on our machine

- Whether the solution meets basic requirements of the subject

The “Submit your code” button allows the candidate to submit their solution for review.

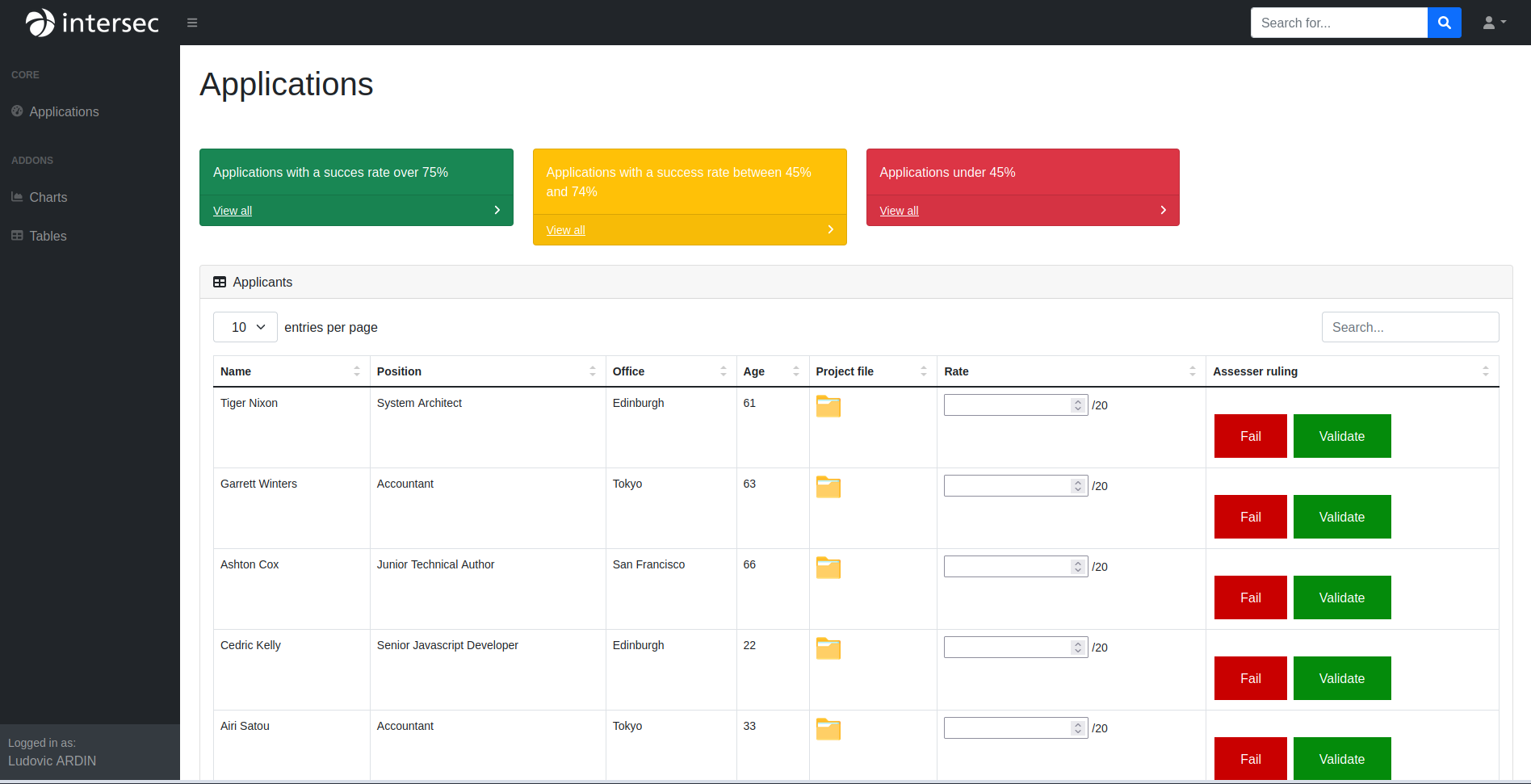

Reviewer view

The reviewers would land on a dashboard. It would present all the candidates pending review as well as previous candidates whose tests have already been reviewed.

To sum up

The ultimate goal is to have a platform that centralizes data and plays nicely with our already existing talent tracking platform.

On top of that, automating the functional part of code reviews as well as adding a plagiarism checker will help reviewers tremendously in their work.

Team members: Yossef Rostaqi, Elie Duleu, Jeremy Caradec and Ludovic Ardin